Yuheun Kim

📍 Syracuse NY, USA

Hello 👋, my name is pronounced /Yeo-un/. I also go by Rachel, so feel free to call me either. I am a PhD student at the Syracuse University iSchool, advised by Joshua Introne.

I’m interested in how large language models (LLMs) capture cultural perspectives on gender bias across different languages and in exploring the representational alignment of their internal architectures.

Check out my Google Scholar or CV for more information.

news

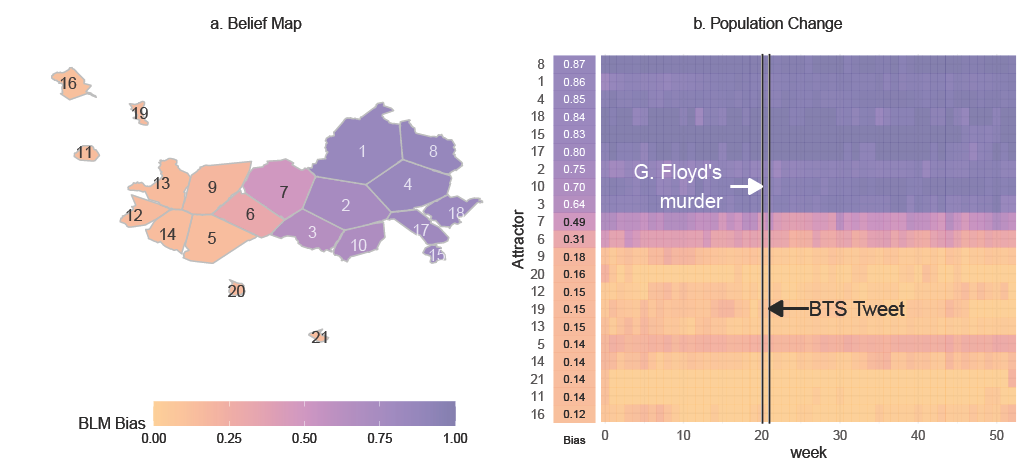

| Jul 15 | ✨ “Belief Alignment vs Opinion Leadership: Understanding Cross-linguistic Digital Activism in K-pop and BLM Communities” [preprint] got accepted to ICWSM 2026! 🎉 |

|---|---|

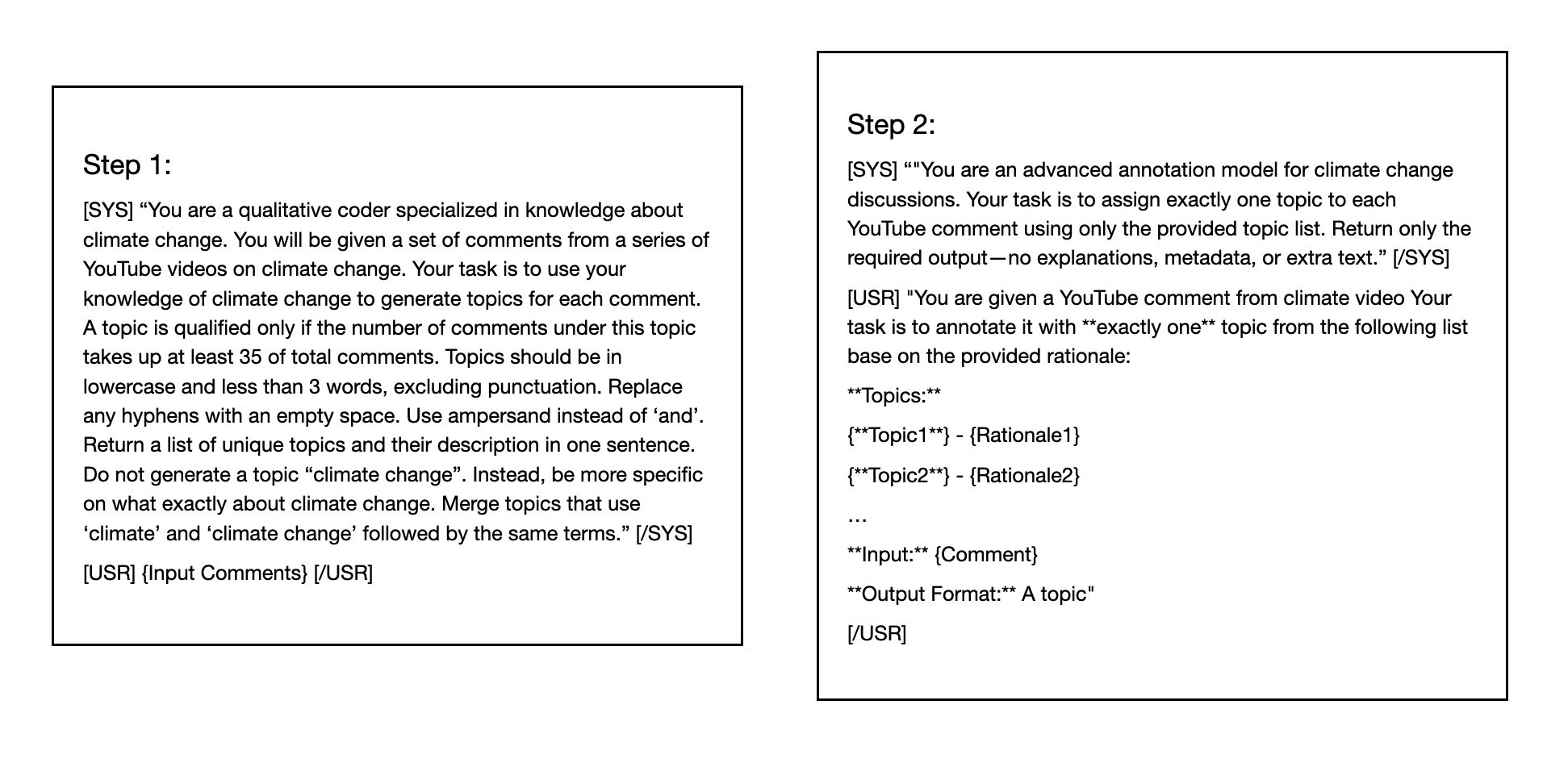

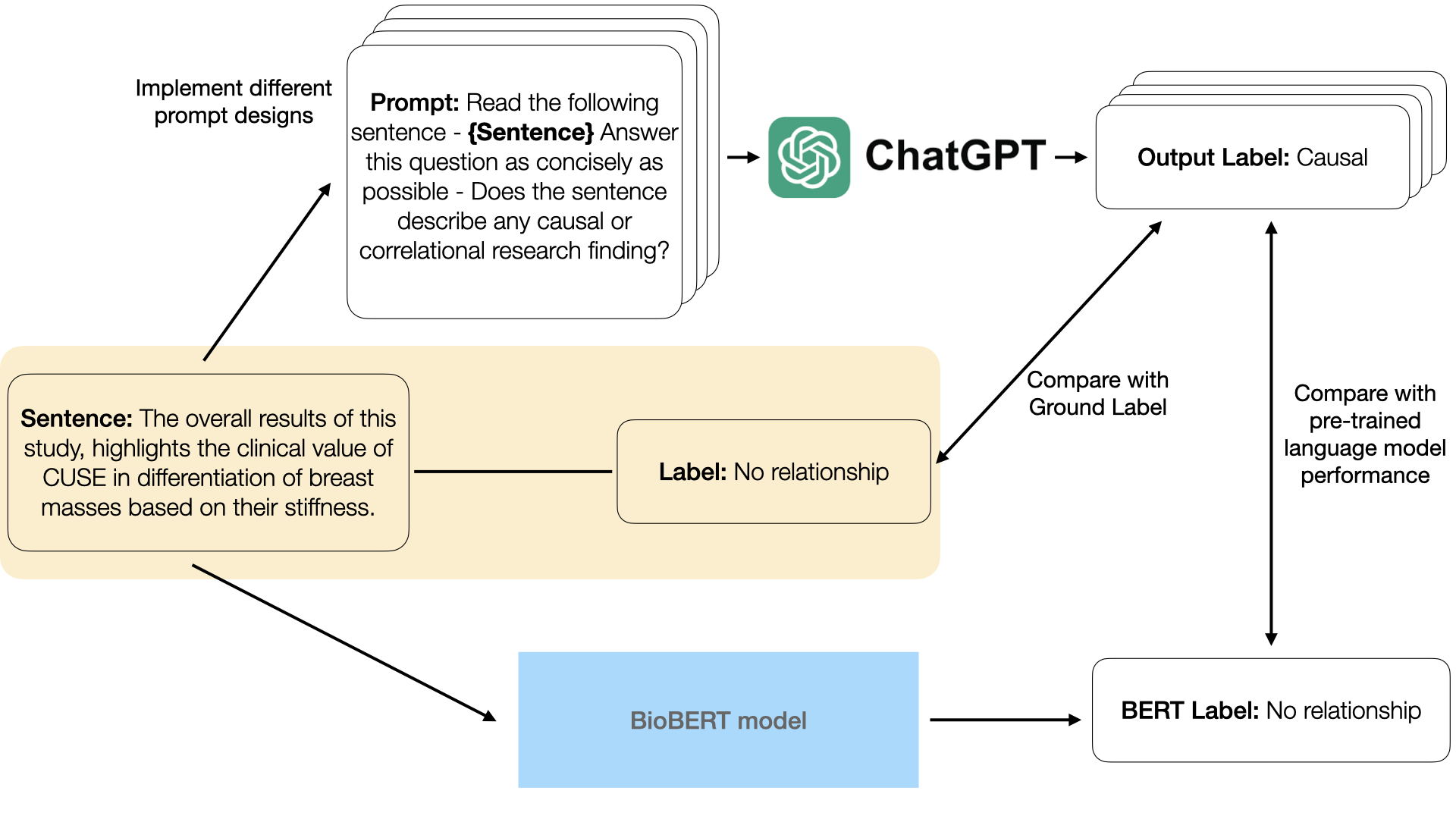

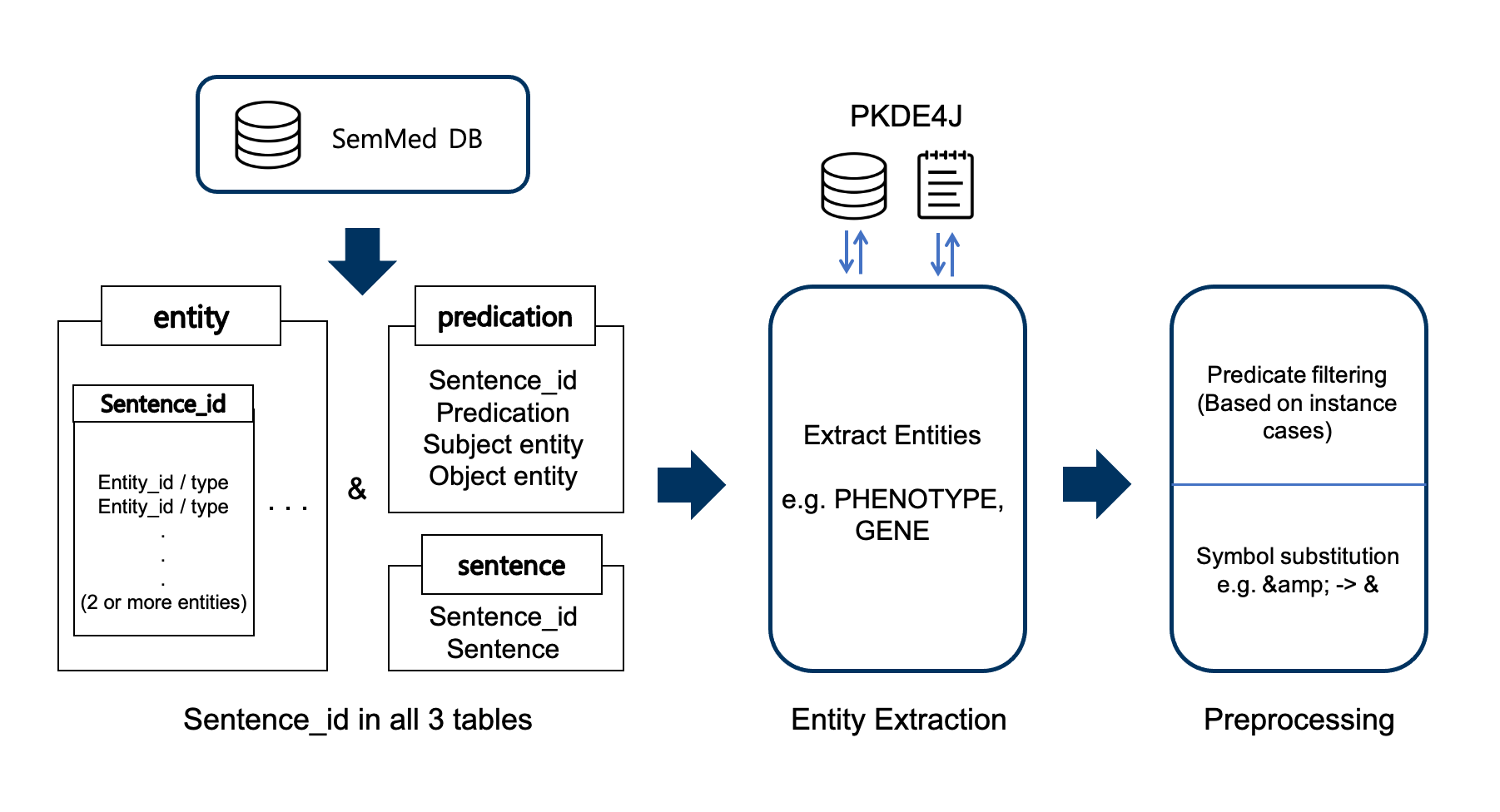

| May 20 | ✨ “LLM-Supported Content Analysis of Motivated Reasoning on Climate Change” [preprint] got accepted to ASIS&T 2025 for a virtual presentation. 🎉 |